Pandas DataFrame Output for sklearn Transformers

Author:

Sangam SwadiK



Upcoming feature in release 1.2

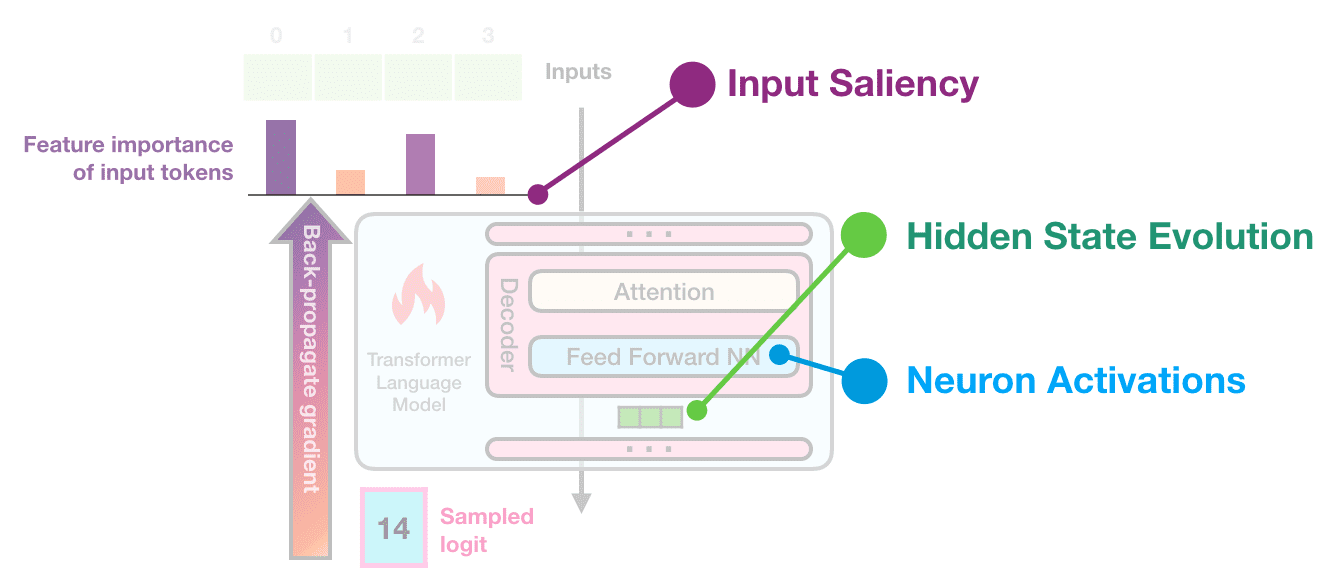

Starting with the next release of scikit-learn (v1.2), pandas DataFrame output will be available for all sklearn transformers! This will make running pipelines on DataFrames much easier and provide better ways to track feature names. Previously, mapping transformed output back to columns was cumbersome, because complex preprocessing (e.g., polynomial features) does not always map input columns to output columns one-to-one.

The pandas DataFrame output feature for transformers solves this by automatically tracking the features generated by pipelines. The transformer output format can be set explicitly to either NumPy or pandas, as shown in the sklearn.set_config documentation and the sample code below.

from sklearn import set_config

set_config(transform_output="pandas")

See the sample notebook, pandas-dataframe-output-for-sklearn-transformer.ipynb, and the documentation for a more detailed example and usage.

Links to documentation and example notebook

Reporting bugs

We’d love your feedback on this. If you have any suggestions or find any bugs, please report them at

scikit-learn issues

Thanks 🙏🏾 to the maintainers: Thomas J. Fan, Guillaume Lemaitre, and Christian Lorentzen!