Large language models (LLMs) have received a great deal of attention over the past year, since the dramatic release and meteoric rise of ChatGPT, and many other models and tools have been developed and refined in the meantime. LLMs have their flaws, but they are remarkably capable: among other things, they can answer questions, write stories, compose poetry and songs, and write code. We’re still in the early stages of learning what these tools can do for us.

But how do these models work? How is text processed, represented, stored, and produced by LLM-based systems? The deep neural networks that LLMs are built on can only operate on numbers, and lots of them. How do we convert text into a form that a deep learning model can process? And once it is converted, how do we store that data so that it is efficient to access and useful to search?

This is where vectors and embeddings come in. Vectors are ordered lists of numbers, and because they are made of numbers, they are a data structure that ML models can work with directly. The question is then how to convert text into vectors in a consistent way, and that’s where embeddings enter the picture: an embedding is a mapping from text (a word, a sentence, or a whole document) to a vector, usually learned by a model, arranged so that pieces of text with similar meanings end up as nearby vectors. Embeddings thus act as a “translation layer” between what we humans can understand and what an LLM can process.
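To make the text-to-vector-to-search pipeline concrete, here is a deliberately simplified, hypothetical sketch in Python. It is not how LLM embedding models work: instead of a learned embedding, it uses a bag-of-words count vector (each position counts one vocabulary word), so it only captures shared words, not meaning. It does show the general shape of the process: turn text into fixed-length vectors, keep them in a store, and find the closest match by cosine similarity. The helper names (`build_vocab`, `embed`, `nearest`) and the sample sentences are made up for this example.

```python
import math
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocab(docs: list[str]) -> dict[str, int]:
    """Assign each distinct word in the corpus a fixed vector position."""
    words = sorted({w for d in docs for w in tokenize(d)})
    return {w: i for i, w in enumerate(words)}


def embed(text: str, vocab: dict[str, int]) -> list[float]:
    """Toy (non-learned) embedding: count vocabulary words, then scale to unit length.
    Words that are not in the vocabulary are ignored."""
    vec = [0.0] * len(vocab)
    for w in tokenize(text):
        if w in vocab:
            vec[vocab[w]] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two unit-length vectors is just their dot product."""
    return sum(x * y for x, y in zip(a, b))


def nearest(query: str, vocab: dict[str, int], store: dict[str, list[float]]) -> str:
    """Brute-force search: return the stored text whose vector is most similar to the query's."""
    q = embed(query, vocab)
    return max(store, key=lambda text: cosine(q, store[text]))


docs = [
    "vectors are ordered lists of numbers",
    "embeddings map text to vectors",
    "poetry and songs written by a language model",
]
vocab = build_vocab(docs)
store = {d: embed(d, vocab) for d in docs}  # a minimal in-memory "vector store"
print(nearest("how do embeddings turn text into vectors", vocab, store))
# prints: embeddings map text to vectors
```

A learned embedding model would take the place of `embed` here, placing paraphrases near each other even when they share no words, and a production vector store would typically use an approximate nearest-neighbor index rather than scanning every stored vector.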

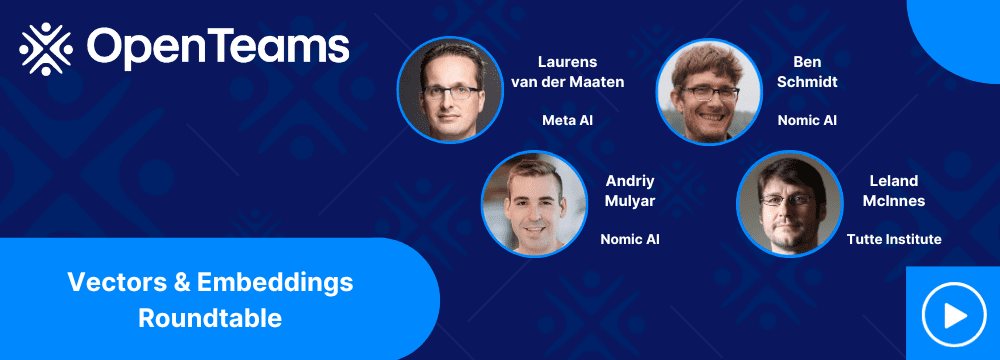

For this event, we have a great roundtable panel lined up for you. Andriy Mulyar and Ben Schmidt of Nomic AI, Laurens van der Maaten of Meta AI, and Leland McInnes of the Tutte Institute for Mathematics and Computing are here to shed some light on embeddings and vectors, and on the challenges involved in storing, searching, and visualizing vector datasets.

Vectors and embeddings are a key topic underlying the magic of generative AI, and we hope you leave this event with a much better understanding of them and how to work with them!

View the recording here.