Interfaces for Explaining Transformer Language Models

Interfaces for exploring transformer language models by looking at input saliency and neuron activation.

Explorable #2: Neuron activation analysis reveals four groups of neurons, each of which is associated with generating a certain type of token.

The Transformer architecture has been powering a number of the recent advances in NLP. A breakdown of this architecture is provided here. Pre-trained language models based on the architecture, in both its auto-regressive (models that use their own output as input to next time-steps and that process tokens from left to right, like GPT2) and denoising (models trained by corrupting/masking the input and that process tokens bidirectionally, like BERT) variants, continue to push the envelope in various tasks in NLP and, more recently, in computer vision. Our understanding of why these models work so well, however, still lags behind these developments.

This exposition series continues the pursuit to interpret and visualize the inner-workings of transformer-based language models.

We illustrate how some key interpretability methods apply to transformer-based language models. This article focuses on auto-regressive models, but these methods are applicable to other architectures and tasks as well.

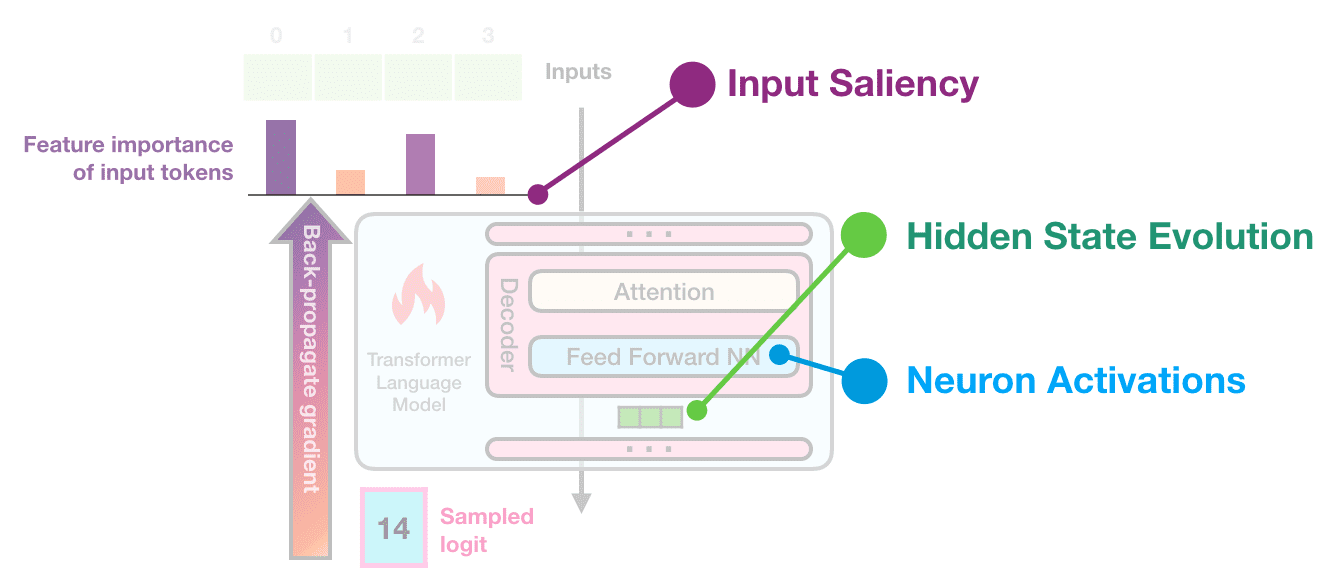

This is the first article in the series. In it, we present explorables and visualizations aiding the intuition of:

- Input Saliency methods that score each input token's importance to generating a token.

- Neuron Activations and how individual neurons and groups of model neurons spike in response to inputs and to produce outputs.

The next article addresses Hidden State Evolution across the layers of the model and what it may tell us about each layer’s role.

In the language of the Interpretable Machine Learning (IML) literature, like Molnar et al., input saliency is a method that explains individual predictions. The other two methods (hidden state evolution and neuron activations) fall under the umbrella of “analyzing components of more complex models”, and are better described as increasing the transparency of transformer models.

Moreover, this article is accompanied by reproducible notebooks and Ecco, an open source library to create similar interactive interfaces directly in Jupyter notebooks for GPT-based models from the HuggingFace transformers library.

If we map the three components we’re examining onto the architecture of the transformer, it looks like the following figure.

Figure: Three methods to gain a little more insight into the inner-workings of Transformer language models.

By introducing tools that visualize input saliency, the evolution of hidden states, and neuron activations, we aim to enable researchers to build more intuition about Transformer language models.

Input Saliency

When a computer vision model classifies a picture as containing a husky, saliency maps can tell us whether the classification was made due to the visual properties of the animal itself, or because of the snow in the background. This is a method of attribution explaining the relationship between a model’s output and inputs — helping us detect errors and biases, and better understand the behavior of the system.

Figure: Input saliency map attributing a model’s prediction to input pixels.

Multiple methods exist for assigning importance scores to the inputs of an NLP model. The literature is most often concerned with this application for classification tasks, rather than natural language generation. This article focuses on language generation. Our first interface calculates feature importance after each token is generated and, by hovering or tapping on an output token, overlays a saliency map on the tokens responsible for generating it.

The first example for this interface asks GPT2-XL for William Shakespeare’s date of birth. The model is correctly able to produce the date (1564, but broken into two tokens, “ 15” and “64”, because the model’s vocabulary does not include “ 1564” as a single token). The interface shows the importance of each input token when generating each output token:

Figure: Input saliency when generating William Shakespeare’s birth year, using Gradient × Input.

GPT2-XL is able to tell the birth date of William Shakespeare, expressed in two tokens. In generating the first token, 53% of the importance is assigned to the name (20% to the first name, 33% to the last name). The next two most important tokens are “ year” (22%) and “ born” (14%). In generating the second token to complete the date, the name is still the most important with 60% importance, followed by the first portion of the date: a model output, but an input to the second time step.

This prompt aims to probe world knowledge. The output was generated using greedy decoding. Smaller variants of GPT2 were not able to output the correct date.

Our second example attempts both to probe a model’s world knowledge and to see whether the model repeats the patterns in the text (simple patterns like the periods after numbers and new lines, and slightly more involved patterns like completing a numbered list). The model used here is DistilGPT2.

This explorable shows a more detailed view that displays the attribution percentage for each token — in case you need that precision.

This was generated by DistilGPT2, with attribution via Gradient × Input. The output sequence is cherry-picked to only include European countries and uses sampled (non-greedy) decoding. Some model runs would include China, Mexico, and other countries in the list. With the exception of the repeated “ Finland”, the model continues the list alphabetically.

Another example that we use illustratively in the rest of this article is one where we ask the model to complete a simple pattern: an alternating sequence of commas and the number one.

Every generated token assigns the highest feature importance score to the first token in the input. Throughout the sequence, the preceding token and the first three tokens in the sequence are often the most important. This uses Gradient × Input on GPT2-XL.

This prompt aims to probe the model’s response to syntax and token patterns. Later in the article, we build on it by switching to counting instead of repeating the digit ‘ 1’. The completion was generated using greedy decoding. DistilGPT2 is able to complete it correctly as well.

It is also possible to use the interface to analyze the responses of a transformer-based conversational agent. In the following example, we pose an existential question to DialoGPT:

This was the model’s first response to the prompt. The question mark is attributed the highest score at the beginning of the output sequence. Generating the tokens “ will” and “ ever” assigns noticeably more importance to the word “ ultimate”.

This uses Gradient × Input on DialoGPT-large.

About Gradient-Based Saliency

The examples above score feature importance using Gradient × Input, a gradient-based saliency method that Atanasova et al. showed to perform well across various text classification datasets in transformer models.

To illustrate how that works, let’s first recall how the model generates the output token in each time step. In the following figure, we see how ① the language model’s final hidden state is projected into the model’s vocabulary, resulting in a numeric score for each token in the model’s vocabulary. Passing that scores vector through a softmax operation results in a probability score for each token. ② We proceed to select a token based on that vector (e.g. select the highest-probability token, or sample from the top-scoring tokens).
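
Steps ① and ② can be sketched in a few lines. This is a minimal, dependency-free illustration under made-up assumptions: the `unembedding` matrix, the 4-token `vocab`, and the hidden state are toy stand-ins (a real model uses its learned output embedding and a vocabulary of tens of thousands of tokens), and the helper names are hypothetical, not Ecco's or HuggingFace's API.

```python
import math
import random


def project_to_vocab(hidden, unembedding):
    """Step 1: score every vocabulary token as the dot product of the hidden state with its row."""
    return [sum(h * w for h, w in zip(hidden, row)) for row in unembedding]


def softmax(logits):
    """Turn logits into probabilities (subtracting the max first for numerical stability)."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_greedy(probs):
    """Step 2, greedy decoding: the index of the highest-probability token."""
    return max(range(len(probs)), key=lambda i: probs[i])


def select_top_k(probs, k, rng):
    """Step 2, top-k sampling: sample among the k highest-probability tokens."""
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return rng.choices(top, weights=[probs[i] for i in top], k=1)[0]


# Made-up toy values: a 3-dimensional hidden state and a 4-token vocabulary.
vocab = ["1", ",", " 2", "."]
unembedding = [
    [0.1, 0.0, 0.2],
    [1.0, 0.5, 0.0],
    [0.0, 0.3, 0.1],
    [0.2, 0.1, 0.0],
]
hidden = [2.0, 1.0, -1.0]

logits = project_to_vocab(hidden, unembedding)  # saliency back-propagates the selected logit
probs = softmax(logits)
greedy = select_greedy(probs)
print(greedy, vocab[greedy])  # → 1 ,
print(vocab[select_top_k(probs, k=2, rng=random.Random(0))])
```

Note that the gradient in step ③ is taken on the selected entry of `logits`, not of `probs`.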

Figure: Gradient-based input saliency

③ By calculating the gradient of the selected logit (before the softmax) with respect to the inputs, back-propagating it all the way to the input tokens, we get a signal of how important each token was in the calculation resulting in this generated token.

This rests on the assumption that a small change to the input token with the highest feature-importance value would produce a large change in the model’s resulting output.

Figure: Gradient X input calculation and aggregation

The resulting gradient vector per token is then multiplied element-wise by the input embedding of the respective token. Taking the L2 norm of the resulting vector gives the token’s feature importance score. We then normalize the scores by dividing each one by the sum of all the scores.
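
The aggregation step can be illustrated with a small sketch. It assumes the per-token gradients have already been obtained by back-propagating the selected logit (in practice an autograd framework does this); the two-dimensional vectors below are made up, and `gradient_x_input_scores` is a hypothetical helper name.

```python
import math


def gradient_x_input_scores(gradients, embeddings):
    """Per-token importance: L2 norm of (gradient ⊙ embedding), normalized so the scores sum to 1."""
    raw_scores = []
    for grad, emb in zip(gradients, embeddings):
        product = [g * x for g, x in zip(grad, emb)]  # element-wise multiplication
        raw_scores.append(math.sqrt(sum(p * p for p in product)))  # L2 norm
    total = sum(raw_scores)
    return [score / total for score in raw_scores]


# Made-up 2-dimensional gradients and embeddings for three input tokens.
gradients = [[3.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
embeddings = [[1.0, 5.0], [3.0, 4.0], [1.0, 7.0]]
print(gradient_x_input_scores(gradients, embeddings))  # → [0.3, 0.5, 0.2]
```

Normalizing by the sum is what lets the interface report each token's share of the importance as a percentage.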

More formally, gradient × input is described as follows:

$$\lVert \nabla_{X_i} f_c(X_{1:n}) \odot X_i \rVert_2$$

where $X_i$ is the embedding vector of the input token at timestep $i$, and $\nabla_{X_i} f_c(X_{1:n})$ is the back-propagated gradient of the score of the selected token, unpacked as follows:

- $X_{1:n}$ is the list of input token embedding vectors in the input sequence (of length $n$).

- $f_c(X_{1:n})$ is the score of the selected token after a forward pass through the model (selected through any one of a number of methods including greedy/argmax decoding, sampling, or beam search).

The $c$ stands for “class”, given this is often described in the classification context. We’re keeping the notation even though, in our case, “token” is more fitting.

This formalization is the one stated by Bastings et al., except that the gradient and input vectors are multiplied element-wise. The resulting vector is then aggregated into a score by calculating its L2 norm, as Atanasova et al. empirically showed this to perform better than other methods (like averaging).
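
To make the formula concrete without an autograd framework, the sketch below swaps back-propagation for central finite differences and uses a hypothetical toy score, $f_c(X_{1:n}) = \tanh(\sum_i W_i \cdot X_i)$, in place of a real language model. Its exact gradient with respect to $X_i$ is $(1 - \tanh^2(s))\,W_i$, which makes the numerical result easy to check. The embeddings `X` and weights `W` are made-up values.

```python
import math


def toy_score(X, W):
    """Hypothetical stand-in for f_c(X_{1:n}): tanh of a weighted sum over all token embeddings."""
    s = sum(w * x for W_i, X_i in zip(W, X) for w, x in zip(W_i, X_i))
    return math.tanh(s)


def numerical_gradient(f, X, eps=1e-6):
    """Approximate the gradient of f with respect to every X_i using central differences.
    A real implementation would back-propagate instead."""
    grads = []
    for i, X_i in enumerate(X):
        g_i = []
        for d in range(len(X_i)):
            plus = [row[:] for row in X]
            minus = [row[:] for row in X]
            plus[i][d] += eps
            minus[i][d] -= eps
            g_i.append((f(plus) - f(minus)) / (2 * eps))
        grads.append(g_i)
    return grads


def gradient_x_input_norms(X, grads):
    """||grad_i ⊙ X_i||_2 for each token i, before normalization."""
    return [math.sqrt(sum((g * x) ** 2 for g, x in zip(g_i, X_i))) for g_i, X_i in zip(grads, X)]


X = [[0.5, -1.0], [1.0, 0.2], [0.0, 0.3]]  # made-up embeddings X_1..X_3
W = [[0.2, 0.1], [0.4, -0.3], [0.1, 0.5]]  # made-up weights of the toy score
grads = numerical_gradient(lambda Z: toy_score(Z, W), X)
norms = gradient_x_input_norms(X, grads)
print([round(n / sum(norms), 3) for n in norms])  # normalized saliency per token
```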

Neuron Activations

The Feed Forward Neural Network (FFNN) sublayer is one of the two major components inside a transformer block (in addition to self-attention). It accounts for 66% of the parameters of a transformer block and thus provides a significant portion of the model’s representational capacity. Previous work has examined neuron firings inside deep neural networks in both the NLP and computer vision domains. In this section we apply that examination to transformer-based language models.
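
The 66% figure can be checked with a quick parameter count. This sketch assumes a GPT-2-style block (four attention projections with biases, a two-layer FFNN, two LayerNorms) with a hidden size of 768 and an FFNN width of 3072, the dimensions used by DistilGPT2; the function name is illustrative. The FFNN's two `d_model × 4·d_model` matrices hold about 8·d² weights versus about 4·d² in the attention projections, hence roughly two-thirds.

```python
def block_parameter_counts(d_model, d_ff):
    """Parameter counts for one GPT-2-style transformer block (weights + biases)."""
    attention = 4 * (d_model * d_model + d_model)  # query, key, value and output projections
    ffnn = (d_model * d_ff + d_ff) + (d_ff * d_model + d_model)  # expand to d_ff, project back
    layer_norms = 2 * (2 * d_model)  # two LayerNorms, each with a scale and a shift vector
    return attention, ffnn, layer_norms


attention, ffnn, layer_norms = block_parameter_counts(d_model=768, d_ff=3072)
print(attention, ffnn, layer_norms)  # → 2362368 4722432 3072
print(f"FFNN share: {ffnn / (attention + ffnn + layer_norms):.1%}")  # → FFNN share: 66.6%
```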

Continue Counting: 1, 2, 3, ___

To guide our neuron examination, let’s present our model with the input “1, 2, 3” in the hope that it would echo the comma/number alternation, yet also keep incrementing the numbers.

It succeeds.

By using the methods we’ll discuss in Article #2 (following the lead of nostalgebraist), we can produce a graphic that exposes the probabilities of output tokens after each layer in the model. This looks at the hidden state after each layer, and displays the ranking of the ultimately produced output token in that layer.

For example, in the first step, the model produced the token “ 4”. The first column tells us about that process. The bottom-most cell in that column shows that the token “ 4” was ranked #1 in probability after the last layer, meaning that the last layer (and thus the model) gave it the highest probability score. The cells above indicate the ranking of the token “ 4” after each layer.
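
Reading off the rankings in this graphic can be sketched as follows. The sketch assumes the per-layer probability distributions have already been computed (in the logit-lens approach, by projecting each layer's hidden state into the vocabulary); the three-layer, four-token numbers are invented for illustration, and the helper names are hypothetical.

```python
def token_rank(probs, token_id):
    """1-based rank of token_id in a probability vector (1 = most probable; ties share a rank)."""
    return 1 + sum(1 for p in probs if p > probs[token_id])


def rank_per_layer(layer_probs, token_id):
    """Rank of one token in the distribution read out after each layer."""
    return [token_rank(probs, token_id) for probs in layer_probs]


# Made-up probabilities over a 4-token vocabulary, read out after each of 3 layers.
layer_probs = [
    [0.40, 0.30, 0.20, 0.10],
    [0.25, 0.35, 0.30, 0.10],
    [0.05, 0.80, 0.10, 0.05],
]
# The token the model actually produced is the top token after the last layer.
produced = max(range(len(layer_probs[-1])), key=lambda i: layer_probs[-1][i])
print(produced, rank_per_layer(layer_probs, produced))  # → 1 [2, 1, 1]
```

Each printed rank corresponds to one cell of a column in the graphic, from the first layer down to the last.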

By looking at the hidden states, we observe that the model gathers confidence about the two patterns of the output sequence (the commas, and the ascending numbers) at different layers. Earlier layers of the model are more comfortable predicting the commas, as that’s a simpler pattern. The model is still able to increment the digits, but it needs at least one more layer to start to be sure about those outputs.

What happens at Layer 4 that makes the model elevate the digits (4, 5, 6) to the top of the probability distribution?

We can plot the activations of the neurons in layer 4 to get a sense of neuron activity. That is what the first of the following three figures shows.

It is difficult, however, to gain any interpretation from looking at activations during one forward pass through the model.

The figures below show neuron activations while five tokens are generated (‘ 4 , 5 , 6’). To get around the sparsity of the firings, we may wish to cluster the firings, which is what the subsequent figure shows.

Figure: Activations of the layer #4 neurons when generating the token ‘ 4’. Each row is a neuron. Only neurons with positive activation are colored; the darker they are, the more intense the firing.

Figure: Each row corresponds to a neuron in the feed-forward neural network of layer #4. Each column is that neuron’s status when a token was generated (namely, the token at the top of the figure). A view of the first 400 neurons shows how sparse the activations usually are (out of the 3072 neurons in the FFNN layer in DistilGPT2).

Figure: To locate the signal, the neurons are clustered (using k-means on the activation values) to reveal the firing pattern.

If visualized and examined properly, neuron firings can reveal the complementary and compositional roles that can be played by individual neurons, and groups of neurons.
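
The clustering step can be sketched with plain k-means over neurons, where each row is one neuron's activations across the generated tokens. This is an illustration, not the article's exact procedure: the deterministic farthest-point initialization, the iteration count, and the five-neuron activation matrix are all assumptions chosen so the example is reproducible. Sorting rows by cluster label gives the reordered view used in a clustered plot.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_init(rows, k):
    """Deterministic seeding: start from the first row, then repeatedly add the row
    farthest from the centroids chosen so far."""
    centroids = [list(rows[0])]
    while len(centroids) < k:
        farthest = max(rows, key=lambda r: min(squared_distance(r, c) for c in centroids))
        centroids.append(list(farthest))
    return centroids


def kmeans(rows, k, iterations=20):
    """Lloyd's k-means over neurons: each row is one neuron's activations across the tokens."""
    centroids = farthest_point_init(rows, k)
    labels = [0] * len(rows)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda c: squared_distance(r, centroids[c])) for r in rows]
        for c in range(k):
            members = [r for r, label in zip(rows, labels) if label == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Made-up activations of five neurons while generating ' 4', ',', ' 5', ',', ' 6'.
activations = [
    [0.9, 0.0, 0.8, 0.0, 0.9],
    [0.0, 0.7, 0.0, 0.8, 0.0],
    [0.8, 0.1, 0.9, 0.0, 0.7],
    [0.1, 0.9, 0.0, 0.6, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
labels = kmeans(activations, k=3)
order = sorted(range(len(activations)), key=lambda i: labels[i])  # row order for the plot
print(labels)  # → [0, 1, 0, 1, 2]
print(order)   # → [0, 2, 1, 3, 4]
```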

Even after clustering, looking directly at activations is a crude and noisy affair. As presented in Olah et al., we are better off reducing the dimensionality using a matrix decomposition method. We follow the authors’ suggestion to use Non-negative Matrix Factorization (NMF) as a natural candidate for reducing the dimensionality into groups that are potentially individually more interpretable. Our first experiments were with Principal Component Analysis (PCA), but NMF is a better approach because it’s difficult to interpret the negative values in a PCA component of neuron firings.

Factor Analysis

By first capturing the activations of the neurons in FFNN layers of the model, and then decomposing them into a more manageable number of factors using NMF, we are able to shed light on how various neurons contributed towards each generated token.

The simplest approach is to break down the activations into two factors. In our next interface, we have the model generate thirty tokens, decompose the activations into two factors, and highlight each token with the factor with the highest activation when that token was generated:
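
Highlighting each token with its strongest factor is an argmax over factors at each token position. A minimal sketch, assuming the factorization has already produced a factors × tokens activation matrix; the activation values and the helper name are hypothetical.

```python
def dominant_factor_per_token(factor_activations):
    """factor_activations[f][t] is factor f's activation at token t; return the strongest factor per token."""
    n_factors = len(factor_activations)
    n_tokens = len(factor_activations[0])
    return [max(range(n_factors), key=lambda f: factor_activations[f][t]) for t in range(n_tokens)]


tokens = ["1", ",", " 2", ",", " 3", ","]
# Made-up activations of two hypothetical factors across those six tokens.
factor_activations = [
    [0.9, 0.1, 0.8, 0.2, 0.7, 0.1],  # a "number" factor
    [0.2, 0.8, 0.1, 0.9, 0.3, 0.8],  # a "comma" factor
]
for token, factor in zip(tokens, dominant_factor_per_token(factor_activations)):
    print(repr(token), "-> factor", factor)
```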

Factor #1 contains the collection of neurons that light up to produce a number. It is a linear combination of 5,449 neurons (30% of the 18,432 neurons in the FFNN layers: 3072 per layer, 6 layers in DistilGPT2).

Factor #2 contains the collection of neurons that light up to produce a comma. It is a linear combination of 8,542 neurons (46% of the FFNN neurons).

The two factors have 4,365 neurons in common.
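
The percentages above follow from simple arithmetic over the 18,432 FFNN neurons. How a neuron is counted as belonging to a factor is not specified here; the sketch assumes a neuron counts if its weight (loading) in the factor is above a threshold of zero, and the five-neuron loadings are made up to show how membership and overlap would be computed.

```python
def neurons_in_factor(loadings, threshold=0.0):
    """Indices of neurons whose weight in a factor is above threshold (the threshold is an assumption)."""
    return {i for i, weight in enumerate(loadings) if weight > threshold}


TOTAL_FFNN_NEURONS = 3072 * 6  # DistilGPT2: 3072 FFNN neurons per layer, 6 layers
print(TOTAL_FFNN_NEURONS)  # → 18432
print(round(100 * 5449 / TOTAL_FFNN_NEURONS), round(100 * 8542 / TOTAL_FFNN_NEURONS))  # → 30 46

# Made-up loadings of five neurons on two factors.
number_factor = [0.0, 0.3, 0.0, 1.2, 0.5]
comma_factor = [0.7, 0.0, 0.0, 0.4, 0.9]
a, b = neurons_in_factor(number_factor), neurons_in_factor(comma_factor)
print(len(a), len(b), len(a & b))  # → 3 3 2
```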

Note: The association between the color and the token is different for input tokens and output tokens. For the input tokens, this is how the neurons fired in response to the token as an input. For the output tokens, this is the activation value which produced the token. This is why the last input token and the first output token share the same activation value.

This interface is capable of compressing a lot of data that showcases the excitement levels of factors composed of groups of neurons. The sparklines on the left give a snapshot of the excitement level of each factor across the entire sequence. Interacting with the sparklines (by hovering with a mouse or tapping on touchscreens) displays the activation of the factor on the tokens in the sequence on the right.

We can see that decomposing activations into two factors resulted in factors that correspond with the alternating patterns we’re analyzing (commas, and incremented numbers). We can increase the resolution of the factor analysis by increasing the number of factors. The following figure decomposes the same activations into five factors.

- Decomposition into five factors shows the counting factor being broken down into three factors, each addressing a distinct portion of the sequence (start, middle, end).

- The yellow factor reliably tracks generating the commas in the sequence.

- The blue factor is common across various GPT2 models: it consists of neurons that intently focus on the first token in the sequence, and only on that token.

We can start extending this to input sequences with more content, like the list of EU countries:

Another example, of how DistilGPT2 reacts to XML, shows a clear distinction of factors attending to different components of the syntax. This time we are breaking down the activations into ten components:

Factorizing neuron activations in response to XML (that was generated by an RNN from ) into ten factors results in factors corresponding to:

- New-lines

- Labels of tags, with higher activation on closing tags

- Indentation spaces

- The ‘<’ (less-than) character starting XML tags

- The large factor focusing on the first token, common to GPT2 models

- Two factors tracking the ‘>’ (greater-than) character at the end of XML tags

- The text inside XML tags

- The ‘</’ symbols indicating closing XML tags

Factorizing Activations of a Single Layer

This interface is a good companion to hidden state examinations, which can highlight a specific layer of interest; using this interface, we can then focus our analysis on that layer. It is straightforward to apply this method to specific layers. Hidden-state evolution diagrams, for example, indicate that layer #0 does a lot of heavy lifting, as it often tends to shortlist the tokens that make it to the top of the probability distribution. The following figure showcases ten factors applied to the activations of layer 0 in response to a passage by Fyodor Dostoevsky:

Ten factors from the activations of the neurons in layer 0 in response to a passage from Notes from Underground by Dostoevsky.

- Factors that focus on specific portions of the text (beginning, middle, and end). This is interesting, as the only signal the model gets about the order of these tokens is the positional encodings. This points to neurons that keep track of the order of words. Further examination is required to assess whether these FFNN neurons directly respond to the time signal, or whether they are responding to a specific trigger in the self-attention layer’s transformation of the hidden state.

- Factors corresponding to linguistic features. For example, we can see factors for pronouns (he, him), auxiliary verbs (would, will), other verbs (introduce, prove; notice the understandable misfiring at the ‘suffer’ token, which is a partial token of ‘sufferings’, a noun), linking verbs (is, are, were), and a factor that favors nouns and their adjectives (“fatal rubbish”, “vulgar folly”).

- A factor corresponding to commas, a syntactic feature.

- The first-token factor.
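
Restricting the analysis to one layer amounts to selecting that layer's neurons from the activation matrix before factorizing it. The sketch assumes a particular layout, with rows stacked layer by layer and a fixed number of neurons per layer (3072 for DistilGPT2's FFNN layers); `layer_rows` is a hypothetical helper, and the tiny matrix is illustrative.

```python
def layer_rows(activations, layer, neurons_per_layer):
    """Rows belonging to one layer, assuming rows are stacked layer by layer
    (all of layer 0's neurons, then layer 1's, and so on)."""
    start = layer * neurons_per_layer
    return activations[start:start + neurons_per_layer]


# Made-up matrix: 3 layers x 2 neurons per layer = 6 rows, 4 tokens per row.
activations = [[10 * r + t for t in range(4)] for r in range(6)]
print(layer_rows(activations, layer=0, neurons_per_layer=2))  # → [[0, 1, 2, 3], [10, 11, 12, 13]]
```

For DistilGPT2, `layer_rows(activations, layer=0, neurons_per_layer=3072)` would give the matrix behind the layer 0 factors, under the same layout assumption.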

We can crank up the resolution by increasing the number of factors. Increasing this to eighteen factors starts to reveal factors that light up in response to adverbs, and other factors that light up in response to partial tokens. Increase the number of factors more and you’ll start to identify factors that light up in response to specific words (“nothing” and “man” seem especially provocative to the layer).

About Activation Factor Analysis

The explorables above show the factors resulting from decomposing the matrix holding the activation values of FFNN neurons using Non-negative Matrix Factorization. The following figure sheds light on how that is done:

Figure: Decomposition of activations matrix using NMF.

NMF reveals patterns of neuron activations inside one or a collection of layers.

Beyond dimensionality reduction, Non-negative Matrix Factorization can reveal underlying common behaviour of groups of neurons. It can be used to analyze the entire network, a single layer, or groups of layers.
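
The decomposition can be sketched with the classic multiplicative update rules for NMF (Lee and Seung), which reduce the reconstruction error ‖A − WH‖ while keeping every entry non-negative. This is a dependency-free illustration, not necessarily how Ecco or the article computes its factors; in practice a library implementation such as scikit-learn's `sklearn.decomposition.NMF` would be used. It assumes the activation matrix `A` has one row per neuron and one column per token, so each column of `W` holds the neurons' weights in one factor and each row of `H` is that factor's activation across the tokens (what the sparklines plot). The small matrix below is made up, with two obvious patterns.

```python
import random


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def nmf(A, k, iterations=200, seed=0, eps=1e-9):
    """Factorize a non-negative A (neurons x tokens) into W (neurons x k) and H (k x tokens)
    with Lee & Seung's multiplicative updates for the squared Frobenius error."""
    rng = random.Random(seed)
    W = [[rng.random() for _ in range(k)] for _ in A]
    H = [[rng.random() for _ in A[0]] for _ in range(k)]
    for _ in range(iterations):
        # H <- H * (W^T A) / (W^T W H), element-wise
        Wt = transpose(W)
        num, den = matmul(Wt, A), matmul(matmul(Wt, W), H)
        H = [[h * n / (d + eps) for h, n, d in zip(*rows)] for rows in zip(H, num, den)]
        # W <- W * (A H^T) / (W H H^T), element-wise
        Ht = transpose(H)
        num, den = matmul(A, Ht), matmul(W, matmul(H, Ht))
        W = [[w * n / (d + eps) for w, n, d in zip(*rows)] for rows in zip(W, num, den)]
    return W, H


def frobenius_error(A, W, H):
    """Frobenius norm of A - WH."""
    WH = matmul(W, H)
    return sum((a - b) ** 2 for row_a, row_b in zip(A, WH) for a, b in zip(row_a, row_b)) ** 0.5


# Made-up activations: 4 neurons x 6 tokens ("1 , 2 , 3 ,"); neurons 0-1 fire on numbers,
# neuron 2 on commas, neuron 3 on everything.
A = [
    [1, 0, 1, 0, 1, 0],
    [2, 0, 2, 0, 2, 0],
    [0, 1, 0, 1, 0, 1],
    [1, 1, 1, 1, 1, 1],
]
W, H = nmf(A, k=2)
print(round(frobenius_error(A, W, H), 4))
print([[round(x, 2) for x in row] for row in H])  # factor activations per token
```

Because every entry of `W` and `H` stays non-negative, each factor can only add activation, which is what makes the factors easier to read than PCA components.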

<!–

Attention [Work in progress]

Attention is most commonly visualized in Sankey diagrams. These diagrams have the benefit of being able to give a snapshot of how multiple tokens are attending to different locations, all in one figure. Downsides to that perspective are the limited length of input or output sequences that can be shown on the screen at once. It could also expose an overwhelming amount of data if the reader’s focus is one token, and not the entire sequence. For these reasons, we believe Token Sparkbars provide a reasonable complement to attention visualization.

One caveat to traditional attention visualizations is that they communicate that a specific token attended to a set of previous tokens. This is a common misreading, as raw attention weights show how one position attended to another position; the contents of these positions contain a mixture of the various tokens from the previous layer. This misconception would be further reinforced if we imposed the bars against the text using token sparkbars. So instead of visualizing raw attention, we visualize attention flow [Abnar], which calculates attention all the way down to the tokens:

[Work in progress]

Conclusion & Future Work

We demonstrated multiple visualizations and explorable interfaces to aid the analysis of Transformer language models spanning input saliency, hidden state evolution, and neuron activation factorization.

We see plenty of room to explore further methods and interfaces that improve the transparency of deep learning models including Transformer-based models. These include:

- Visualizing and examining other key components of transformer language-models. While attention is widely analyzed, and while this article shed more light on the work on hidden states and feed-forward neuron activations, components like decoding strategies are essential pieces of the puzzle which can benefit from interfaces to aid intuition building. This is especially the case when probing for world knowledge in models.

- Visualizations that combine multiple interpretability techniques as suggested in Olah et al. Combining saliency with factors, for example, could shed more light on the roles of various factors and neurons.

- NLP visualization tools that communicate large amounts of data and impose them in word-sized graphics that are present in the flow of the text and right next to their respective tokens. We, for example, can now envision more compact displays for hidden state evolution (by imposing the ranking as a sparkline right next to the tokens in a paragraph).

- Visual tools that aid understanding of complex black-box models by visualizing the various components of these models, the data flowing through them, and how they behave as systems. We can envision applying an explorable similar to the input saliency visualization demonstrated in this work to attention. We would like to see it done with attention flow, however, rather than raw attention.

- Other saliency methods that deal with some of the shortcomings of Gradient × Input, like Integrated Gradients. We are also keen to see more work investigating saliency methods for natural language generation beyond scoring single predictions. Interesting directions include .

- Interesting directions that examine hidden state evolution include the Canonical Correlation Analysis (CCA) line of investigation and, more recently, the work of De Cao et al.

We hope such tools will enable researchers and engineers to build intuitions that aid their work in understanding and improving these architectures.

–>

Conclusion

This concludes the first article in the series. Be sure to click on the notebooks and play with Ecco! I would love your feedback on this article, series, and on Ecco in this thread. If you find interesting factors or neurons, feel free to post them there as well. I welcome all feedback!

Acknowledgements

This article was vastly improved thanks to feedback on earlier drafts provided by Abdullah Almaatouq, Ahmad Alwosheel, Anfal Alatawi, Christopher Olah, Fahd Alhazmi, Hadeel Al-Negheimish, Isabelle Augenstein, Jasmijn Bastings, Najla Alariefy, Najwa Alghamdi, Pepa Atanasova, and Sebastian Gehrmann.

References

Citation

If you found this work helpful for your research, please cite it as follows:

Alammar, J. (2020). Interfaces for Explaining Transformer Language Models

[Blog post]. Retrieved from https://jalammar.github.io/explaining-transformers/

BibTex:

@misc{alammar2020explaining,

title={Interfaces for Explaining Transformer Language Models},

author={Alammar, J},

year={2020},

url={https://jalammar.github.io/explaining-transformers/}

}

@article{poerner2018interpretable,

title={Interpretable textual neuron representations for NLP},

author={Poerner, Nina and Roth, Benjamin and Sch{\"u}tze, Hinrich},

journal={arXiv preprint arXiv:1809.07291},

year={2018},

url={https://arxiv.org/pdf/1809.07291}

}

@misc{karpathy2015visualizing,

title={Visualizing and Understanding Recurrent Networks},

author={Andrej Karpathy and Justin Johnson and Li Fei-Fei},

year={2015},

eprint={1506.02078},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/pdf/1506.02078.pdf}

}

@article{olah2017feature,

title={Feature visualization},

author={Olah, Chris and Mordvintsev, Alexander and Schubert, Ludwig},

journal={Distill},

volume={2},

number={11},

pages={e7},

year={2017},

url={https://distill.pub/2017/feature-visualization/}

}

@article{olah2018building,

title={The building blocks of interpretability},

author={Olah, Chris and Satyanarayan, Arvind and Johnson, Ian and Carter, Shan and Schubert, Ludwig and Ye, Katherine and Mordvintsev, Alexander},

journal={Distill},

volume={3},

number={3},

pages={e10},

year={2018},

url={https://distill.pub/2018/building-blocks/}

}

@article{abnar2020quantifying,

title={Quantifying Attention Flow in Transformers},

author={Abnar, Samira and Zuidema, Willem},

journal={arXiv preprint arXiv:2005.00928},

year={2020},

url={https://arxiv.org/pdf/2005.00928}

}

@article{li2015visualizing,

title={Visualizing and understanding neural models in nlp},

author={Li, Jiwei and Chen, Xinlei and Hovy, Eduard and Jurafsky, Dan},

journal={arXiv preprint arXiv:1506.01066},

year={2015},

url={https://arxiv.org/pdf/1506.01066}

}

@inproceedings{park2019sanvis,

title={SANVis: Visual Analytics for Understanding Self-Attention Networks},

author={Park, Cheonbok and Na, Inyoup and Jo, Yongjang and Shin, Sungbok and Yoo, Jaehyo and Kwon, Bum Chul and Zhao, Jian and Noh, Hyungjong and Lee, Yeonsoo and Choo, Jaegul},

booktitle={2019 IEEE Visualization Conference (VIS)},

pages={146--150},

year={2019},

organization={IEEE},

url={https://arxiv.org/pdf/1909.09595}

}

@misc{nostalgebraist2020,

title={interpreting GPT: the logit lens},

url={https://www.lesswrong.com/posts/AcKRB8wDpdaN6v6ru/interpreting-gpt-the-logit-lens},

year={2020},

author={nostalgebraist}

}

@article{vig2019analyzing,

title={Analyzing the structure of attention in a transformer language model},

author={Vig, Jesse and Belinkov, Yonatan},

journal={arXiv preprint arXiv:1906.04284},

year={2019},

url={https://arxiv.org/pdf/1906.04284}

}

@inproceedings{hoover2020,

title = "ex{BERT}: A Visual Analysis Tool to Explore Learned Representations in {T}ransformer Models",

author = "Hoover, Benjamin and Strobelt, Hendrik and Gehrmann, Sebastian",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics: System Demonstrations",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.acl-demos.22",

pages = "187--196"

}

@article{jones2017,

title = "Tensor2tensor transformer visualization",

author = "Llion Jones",

year = "2017",

url = "https://github.com/tensorflow/tensor2tensor/tree/master/tensor2tensor/visualization"

}

@article{voita2019bottom,

title={The bottom-up evolution of representations in the transformer: A study with machine translation and language modeling objectives},

author={Voita, Elena and Sennrich, Rico and Titov, Ivan},

journal={arXiv preprint arXiv:1909.01380},

year={2019},

url={https://arxiv.org/pdf/1909.01380.pdf}

}

@misc{bastings2020elephant,

title={The elephant in the interpretability room: Why use attention as explanation when we have saliency methods?},

author={Jasmijn Bastings and Katja Filippova},

year={2020},

eprint={2010.05607},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/2010.05607.pdf}

}

@article{linzen2016assessing,

title={Assessing the ability of LSTMs to learn syntax-sensitive dependencies},

author={Linzen, Tal and Dupoux, Emmanuel and Goldberg, Yoav},

journal={Transactions of the Association for Computational Linguistics},

volume={4},

pages={521--535},

year={2016},

publisher={MIT Press}

}

@book{tufte2006beautiful,

title={Beautiful evidence},

author={Tufte, Edward R},

year={2006},

publisher={Graphis Pr}

}

@article{pedregosa2011scikit,

title={Scikit-learn: Machine learning in Python},

author={Pedregosa, Fabian and Varoquaux, Ga{\"e}l and Gramfort, Alexandre and Michel, Vincent and Thirion, Bertrand and Grisel, Olivier and Blondel, Mathieu and Prettenhofer, Peter and Weiss, Ron and Dubourg, Vincent and others},

journal={the Journal of machine Learning research},

volume={12},

pages={2825--2830},

year={2011},

publisher={JMLR. org},

url={https://www.jmlr.org/papers/volume12/pedregosa11a/pedregosa11a.pdf}

}

@article{walt2011numpy,

title={The NumPy array: a structure for efficient numerical computation},

author={Walt, St{\'e}fan van der and Colbert, S Chris and Varoquaux, Gael},

journal={Computing in science & engineering},

volume={13},

number={2},

pages={22--30},

year={2011},

publisher={IEEE Computer Society},

url={https://hal.inria.fr/inria-00564007/document}

}

@article{wolf2019huggingface,

title={HuggingFace’s Transformers: State-of-the-art Natural Language Processing},

author={Wolf, Thomas and Debut, Lysandre and Sanh, Victor and Chaumond, Julien and Delangue, Clement and Moi, Anthony and Cistac, Pierric and Rault, Tim and Louf, R{\'e}mi and Funtowicz, Morgan and others},

journal={arXiv preprint arXiv:1910.03771},

year={2019},

url={https://www.aclweb.org/anthology/2020.emnlp-demos.6.pdf}

}

@misc{bostock2012d3,

title={{D3.js}: Data-Driven Documents},

author={Bostock, Mike and others},

url={https://d3js.org/},

note={Accessed: 17-Sep-2019},

year={2012}

}

@article{ragan2014jupyter,

title={The {Jupyter/IPython} architecture: a unified view of computational research, from interactive exploration to communication and publication},

author={Ragan-Kelley, Min and Perez, F and Granger, B and Kluyver, T and Ivanov, P and Frederic, J and Bussonnier, M},

journal={AGUFM},

volume={2014},

pages={H44D–07},

year={2014},

url={https://ui.adsabs.harvard.edu/abs/2014AGUFM.H44D..07R/abstract}

}

@article{kokhlikyan2020captum,

title={Captum: A unified and generic model interpretability library for PyTorch},

author={Kokhlikyan, Narine and Miglani, Vivek and Martin, Miguel and Wang, Edward and Alsallakh, Bilal and Reynolds, Jonathan and Melnikov, Alexander and Kliushkina, Natalia and Araya, Carlos and Yan, Siqi and others},

journal={arXiv preprint arXiv:2009.07896},

year={2020}

}

@misc{li2016visualizing,

title={Visualizing and Understanding Neural Models in NLP},

author={Jiwei Li and Xinlei Chen and Eduard Hovy and Dan Jurafsky},

year={2016},

eprint={1506.01066},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@misc{radford2017learning,

title={Learning to Generate Reviews and Discovering Sentiment},

author={Alec Radford and Rafal Jozefowicz and Ilya Sutskever},

year={2017},

eprint={1704.01444},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/pdf/1704.01444.pdf}

}

@misc{liu2019linguistic,

title={Linguistic Knowledge and Transferability of Contextual Representations},

author={Nelson F. Liu and Matt Gardner and Yonatan Belinkov and Matthew E. Peters and Noah A. Smith},

year={2019},

eprint={1903.08855},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/1903.08855.pdf}

}

@misc{rogers2020primer,

title={A Primer in BERTology: What we know about how BERT works},

author={Anna Rogers and Olga Kovaleva and Anna Rumshisky},

year={2020},

eprint={2002.12327},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/2002.12327.pdf}

}

@article{cammarata2020thread,

author = {Cammarata, Nick and Carter, Shan and Goh, Gabriel and Olah, Chris and Petrov, Michael and Schubert, Ludwig},

title = {Thread: Circuits},

journal = {Distill},

year = {2020},

note = {https://distill.pub/2020/circuits},

doi = {10.23915/distill.00024},

url={https://distill.pub/2020/circuits/}

}

@article{victor2013media,

title={Media for thinking the unthinkable},

author={Victor, Bret},

journal={Vimeo, May},

year={2013}

}

@article{molnar2020interpretable,

title={Interpretable Machine Learning -- A Brief History, State-of-the-Art and Challenges},

author={Molnar, Christoph and Casalicchio, Giuseppe and Bischl, Bernd},

journal={arXiv preprint arXiv:2010.09337},

year={2020},

url={https://arxiv.org/pdf/2010.09337.pdf}

}

@inproceedings{vaswani2017attention,

title={Attention is all you need},

author={Vaswani, Ashish and Shazeer, Noam and Parmar, Niki and Uszkoreit, Jakob and Jones, Llion and Gomez, Aidan N and Kaiser, {\L}ukasz and Polosukhin, Illia},

booktitle={Advances in neural information processing systems},

pages={5998--6008},

year={2017},

url={https://papers.nips.cc/paper/7181-attention-is-all-you-need.pdf}

}

@article{liu2018generating,

title={Generating {W}ikipedia by summarizing long sequences},

author={Liu, Peter J and Saleh, Mohammad and Pot, Etienne and Goodrich, Ben and Sepassi, Ryan and Kaiser, Lukasz and Shazeer, Noam},

journal={arXiv preprint arXiv:1801.10198},

year={2018},

url={https://arxiv.org/pdf/1801.10198}

}

@misc{radford2018improving,

title={Improving language understanding by generative pre-training},

author={Radford, Alec and Narasimhan, Karthik and Salimans, Tim and Sutskever, Ilya},

year={2018},

url={https://www.cs.ubc.ca/~amuham01/LING530/papers/radford2018improving.pdf}

}

@article{radford2019language,

title={Language models are unsupervised multitask learners},

author={Radford, Alec and Wu, Jeffrey and Child, Rewon and Luan, David and Amodei, Dario and Sutskever, Ilya},

journal={OpenAI blog},

volume={1},

number={8},

pages={9},

year={2019},

url={https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf}

}

@article{brown2020language,

title={Language models are few-shot learners},

author={Brown, Tom B and Mann, Benjamin and Ryder, Nick and Subbiah, Melanie and Kaplan, Jared and Dhariwal, Prafulla and Neelakantan, Arvind and Shyam, Pranav and Sastry, Girish and Askell, Amanda and others},

journal={arXiv preprint arXiv:2005.14165},

year={2020},

url={https://arxiv.org/pdf/2005.14165.pdf}

}

@article{devlin2018bert,

title={{BERT}: Pre-training of deep bidirectional transformers for language understanding},

author={Devlin, Jacob and Chang, Ming-Wei and Lee, Kenton and Toutanova, Kristina},

journal={arXiv preprint arXiv:1810.04805},

year={2018},

url={https://arxiv.org/pdf/1810.04805.pdf}

}

@article{liu2019roberta,

title={{RoBERTa}: A robustly optimized {BERT} pretraining approach},

author={Liu, Yinhan and Ott, Myle and Goyal, Naman and Du, Jingfei and Joshi, Mandar and Chen, Danqi and Levy, Omer and Lewis, Mike and Zettlemoyer, Luke and Stoyanov, Veselin},

journal={arXiv preprint arXiv:1907.11692},

year={2019},

url={https://arxiv.org/pdf/1907.11692}

}

@article{lan2019albert,

title={{ALBERT}: A lite {BERT} for self-supervised learning of language representations},

author={Lan, Zhenzhong and Chen, Mingda and Goodman, Sebastian and Gimpel, Kevin and Sharma, Piyush and Soricut, Radu},

journal={arXiv preprint arXiv:1909.11942},

year={2019},

url={https://arxiv.org/pdf/1909.11942.pdf}

}

@article{lewis2019bart,

title={{BART}: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension},

author={Lewis, Mike and Liu, Yinhan and Goyal, Naman and Ghazvininejad, Marjan and Mohamed, Abdelrahman and Levy, Omer and Stoyanov, Ves and Zettlemoyer, Luke},

journal={arXiv preprint arXiv:1910.13461},

year={2019},

url={https://arxiv.org/pdf/1910.13461}

}

@article{raffel2019exploring,

title={Exploring the limits of transfer learning with a unified text-to-text transformer},

author={Raffel, Colin and Shazeer, Noam and Roberts, Adam and Lee, Katherine and Narang, Sharan and Matena, Michael and Zhou, Yanqi and Li, Wei and Liu, Peter J},

journal={arXiv preprint arXiv:1910.10683},

year={2019},

url={https://arxiv.org/pdf/1910.10683}

}

@article{dosovitskiy2020image,

title={An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale},

author={Dosovitskiy, Alexey and Beyer, Lucas and Kolesnikov, Alexander and Weissenborn, Dirk and Zhai, Xiaohua and Unterthiner, Thomas and Dehghani, Mostafa and Minderer, Matthias and Heigold, Georg and Gelly, Sylvain and others},

journal={arXiv preprint arXiv:2010.11929},

year={2020},

url={https://arxiv.org/pdf/2010.11929.pdf}

}

@article{zhao2019gender,

title={Gender bias in contextualized word embeddings},

author={Zhao, Jieyu and Wang, Tianlu and Yatskar, Mark and Cotterell, Ryan and Ordonez, Vicente and Chang, Kai-Wei},

journal={arXiv preprint arXiv:1904.03310},

year={2019},

url={https://arxiv.org/pdf/1904.03310.pdf}

}

@article{kurita2019measuring,

title={Measuring bias in contextualized word representations},

author={Kurita, Keita and Vyas, Nidhi and Pareek, Ayush and Black, Alan W and Tsvetkov, Yulia},

journal={arXiv preprint arXiv:1906.07337},

year={2019},

url={https://arxiv.org/pdf/1906.07337.pdf}

}

@article{basta2019evaluating,

title={Evaluating the underlying gender bias in contextualized word embeddings},

author={Basta, Christine and Costa-Juss{\`a}, Marta R and Casas, Noe},

journal={arXiv preprint arXiv:1904.08783},

year={2019},

url={https://arxiv.org/pdf/1904.08783.pdf}

}

@misc{atanasova2020diagnostic,

title={A Diagnostic Study of Explainability Techniques for Text Classification},

author={Pepa Atanasova and Jakob Grue Simonsen and Christina Lioma and Isabelle Augenstein},

year={2020},

eprint={2009.13295},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/2009.13295.pdf}

}

@article{madsen2019visualizing,

author = {Madsen, Andreas},

title = {Visualizing memorization in RNNs},

journal = {Distill},

year = {2019},

note = {https://distill.pub/2019/memorization-in-rnns},

doi = {10.23915/distill.00016},

url={https://distill.pub/2019/memorization-in-rnns/}

}

@misc{vig2019visualizing,

title={Visualizing Attention in Transformer-Based Language Representation Models},

author={Jesse Vig},

year={2019},

eprint={1904.02679},

archivePrefix={arXiv},

primaryClass={cs.HC},

url={https://arxiv.org/pdf/1904.02679}

}

@misc{tenney2020language,

title={The Language Interpretability Tool: Extensible, Interactive Visualizations and Analysis for NLP Models},

author={Ian Tenney and James Wexler and Jasmijn Bastings and Tolga Bolukbasi and Andy Coenen and Sebastian Gehrmann and Ellen Jiang and Mahima Pushkarna and Carey Radebaugh and Emily Reif and Ann Yuan},

year={2020},

eprint={2008.05122},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/2008.05122}

}

@article{wallace2019allennlp,

title={{AllenNLP} Interpret: A framework for explaining predictions of {NLP} models},

author={Wallace, Eric and Tuyls, Jens and Wang, Junlin and Subramanian, Sanjay and Gardner, Matt and Singh, Sameer},

journal={arXiv preprint arXiv:1909.09251},

year={2019},

url={https://arxiv.org/pdf/1909.09251.pdf}

}

@misc{zhang2020dialogpt,

title={DialoGPT: Large-Scale Generative Pre-training for Conversational Response Generation},

author={Yizhe Zhang and Siqi Sun and Michel Galley and Yen-Chun Chen and Chris Brockett and Xiang Gao and Jianfeng Gao and Jingjing Liu and Bill Dolan},

year={2020},

eprint={1911.00536},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/1911.00536}

}

@misc{sanh2020distilbert,

title={DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter},

author={Victor Sanh and Lysandre Debut and Julien Chaumond and Thomas Wolf},

year={2020},

eprint={1910.01108},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/1910.01108}

}

@misc{shrikumar2017just,

title={Not Just a Black Box: Learning Important Features Through Propagating Activation Differences},

author={Avanti Shrikumar and Peyton Greenside and Anna Shcherbina and Anshul Kundaje},

year={2017},

eprint={1605.01713},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/pdf/1605.01713}

}

@misc{denil2015extraction,

title={Extraction of Salient Sentences from Labelled Documents},

author={Misha Denil and Alban Demiraj and Nando de Freitas},

year={2015},

eprint={1412.6815},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/1412.6815.pdf}

}

@misc{webster2020measuring,

title={Measuring and Reducing Gendered Correlations in Pre-trained Models},

author={Kellie Webster and Xuezhi Wang and Ian Tenney and Alex Beutel and Emily Pitler and Ellie Pavlick and Jilin Chen and Slav Petrov},

year={2020},

eprint={2010.06032},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/2010.06032}

}

@article{arrieta2020explainable,

title={Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI},

author={Arrieta, Alejandro Barredo and D{\'\i}az-Rodr{\'\i}guez, Natalia and Del Ser, Javier and Bennetot, Adrien and Tabik, Siham and Barbado, Alberto and Garc{\'\i}a, Salvador and Gil-L{\'o}pez, Sergio and Molina, Daniel and Benjamins, Richard and others},

journal={Information Fusion},

volume={58},

pages={82--115},

year={2020},

publisher={Elsevier},

url={https://arxiv.org/pdf/1910.10045.pdf}

}

@article{tsang2020does,

title={How does this interaction affect me? Interpretable attribution for feature interactions},

author={Tsang, Michael and Rambhatla, Sirisha and Liu, Yan},

journal={arXiv preprint arXiv:2006.10965},

year={2020},

url={https://arxiv.org/pdf/2006.10965.pdf}

}

@misc{swayamdipta2020dataset,

title={Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics},

author={Swabha Swayamdipta and Roy Schwartz and Nicholas Lourie and Yizhong Wang and Hannaneh Hajishirzi and Noah A. Smith and Yejin Choi},

year={2020},

eprint={2009.10795},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/2009.10795}

}

@article{han2020explaining,

title={Explaining Black Box Predictions and Unveiling Data Artifacts through Influence Functions},

author={Han, Xiaochuang and Wallace, Byron C and Tsvetkov, Yulia},

journal={arXiv preprint arXiv:2005.06676},

year={2020},

url={https://arxiv.org/pdf/2005.06676.pdf}

}

@article{alammar2018illustrated,

title={The illustrated transformer},

author={Alammar, Jay},

journal={The Illustrated Transformer -- Jay Alammar -- Visualizing Machine Learning One Concept at a Time},

volume={27},

year={2018},

url={https://jalammar.github.io/illustrated-transformer/}

}

@misc{sundararajan2017axiomatic,

title={Axiomatic Attribution for Deep Networks},

author={Mukund Sundararajan and Ankur Taly and Qiqi Yan},

year={2017},

eprint={1703.01365},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/pdf/1703.01365}

}

@misc{strobelt2017lstmvis,

title={LSTMVis: A Tool for Visual Analysis of Hidden State Dynamics in Recurrent Neural Networks},

author={Hendrik Strobelt and Sebastian Gehrmann and Hanspeter Pfister and Alexander M. Rush},

year={2017},

eprint={1606.07461},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/1606.07461.pdf}

}

@inproceedings{dalvi2019neurox,

title={{NeuroX}: A toolkit for analyzing individual neurons in neural networks},

author={Dalvi, Fahim and Nortonsmith, Avery and Bau, Anthony and Belinkov, Yonatan and Sajjad, Hassan and Durrani, Nadir and Glass, James},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={33},

pages={9851--9852},

year={2019},

url={https://arxiv.org/pdf/1812.09359.pdf}

}

@article{de2020decisions,

title={How do decisions emerge across layers in neural models? Interpretation with differentiable masking},

author={De Cao, Nicola and Schlichtkrull, Michael and Aziz, Wilker and Titov, Ivan},

journal={arXiv preprint arXiv:2004.14992},

year={2020},

url={https://arxiv.org/pdf/2004.14992.pdf}

}

@inproceedings{morcos2018insights,

title={Insights on representational similarity in neural networks with canonical correlation},

author={Morcos, Ari and Raghu, Maithra and Bengio, Samy},

booktitle={Advances in Neural Information Processing Systems},

pages={5727--5736},

year={2018},

url={https://papers.nips.cc/paper/2018/file/a7a3d70c6d17a73140918996d03c014f-Paper.pdf}

}

@inproceedings{raghu2017svcca,

title={{SVCCA}: Singular vector canonical correlation analysis for deep learning dynamics and interpretability},

author={Raghu, Maithra and Gilmer, Justin and Yosinski, Jason and Sohl-Dickstein, Jascha},

booktitle={Advances in Neural Information Processing Systems},

pages={6076--6085},

year={2017},

url={https://papers.nips.cc/paper/2017/file/dc6a7e655d7e5840e66733e9ee67cc69-Paper.pdf}

}

@incollection{hotelling1992relations,

title={Relations between two sets of variates},

author={Hotelling, Harold},

booktitle={Breakthroughs in statistics},

pages={162--190},

year={1992},

publisher={Springer}

}

@article{massarelli2019decoding,

title={How decoding strategies affect the verifiability of generated text},

author={Massarelli, Luca and Petroni, Fabio and Piktus, Aleksandra and Ott, Myle and Rockt{\"a}schel, Tim and Plachouras, Vassilis and Silvestri, Fabrizio and Riedel, Sebastian},

journal={arXiv preprint arXiv:1911.03587},

year={2019},

url={https://arxiv.org/pdf/1911.03587.pdf}

}

@misc{holtzman2020curious,

title={The Curious Case of Neural Text Degeneration},

author={Ari Holtzman and Jan Buys and Li Du and Maxwell Forbes and Yejin Choi},

year={2020},

eprint={1904.09751},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/1904.09751.pdf}

}

@article{petroni2020context,

title={How Context Affects Language Models' Factual Predictions},

author={Petroni, Fabio and Lewis, Patrick and Piktus, Aleksandra and Rockt{\"a}schel, Tim and Wu, Yuxiang and Miller, Alexander H and Riedel, Sebastian},

journal={arXiv preprint arXiv:2005.04611},

year={2020},

url={https://arxiv.org/pdf/2005.04611.pdf}

}

@misc{ribeiro2016whyribeiro2016why,

title={``Why Should I Trust You?'': Explaining the Predictions of Any Classifier},

author={Marco Tulio Ribeiro and Sameer Singh and Carlos Guestrin},

year={2016},

eprint={1602.04938},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/pdf/1602.04938.pdf}

}

@misc{du2019techniques,

title={Techniques for Interpretable Machine Learning},

author={Mengnan Du and Ninghao Liu and Xia Hu},

year={2019},

eprint={1808.00033},

archivePrefix={arXiv},

primaryClass={cs.LG},

url={https://arxiv.org/pdf/1808.00033.pdf}

}

@article{carvalho2019machine,

title={Machine learning interpretability: A survey on methods and metrics},

author={Carvalho, Diogo V and Pereira, Eduardo M and Cardoso, Jaime S},

journal={Electronics},

volume={8},

number={8},

pages={832},

year={2019},

publisher={Multidisciplinary Digital Publishing Institute},

url={https://www.mdpi.com/2079-9292/8/8/832/pdf}

}

@misc{durrani2020analyzing,

title={Analyzing Individual Neurons in Pre-trained Language Models},

author={Nadir Durrani and Hassan Sajjad and Fahim Dalvi and Yonatan Belinkov},

year={2020},

eprint={2010.02695},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/pdf/2010.02695}

}